MAN CODE STRATOSPHERE

The nature of the project itself (refining something down to a point and then using that point as a foundation for extrapolation/creation) was quite inspiring. I became interested in using this somewhat meta reference to the assignment process as a conceptual starting point for a making process. That is, I wanted to examine identity (one of the first things that came to mind when I discovered my word) as a concept by recycling the process of refining then extrapolating, and applying it to my media.

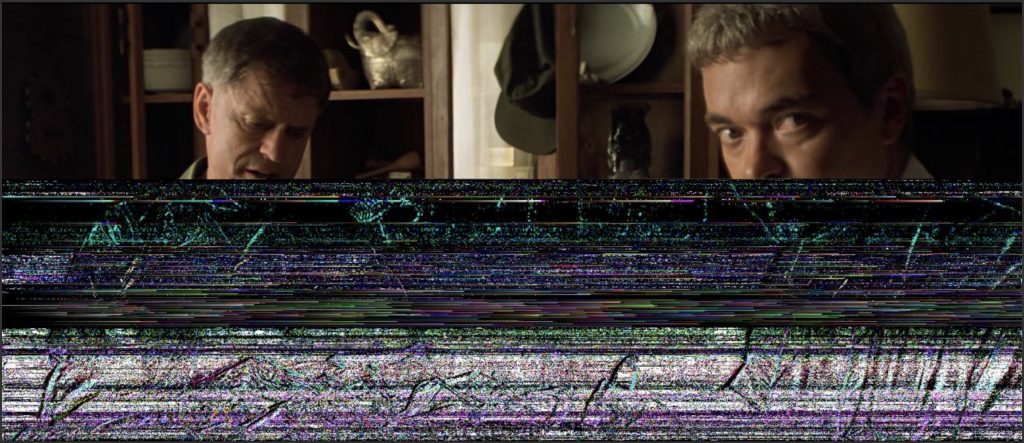

I felt this was particularly relevant given the media that my process generated. Apocalypse Now Redux (2001) is an entity that is both entirely new and a facsimile of something else (Apocalypse Now (1979)). As mentioned above, when I found MAN as my word, one of the first things that came to mind was the notion of identity (particularly surrounding gender and masculinity). I was interested in the performative nature of masculinity, in how those presenting as male navigate their identities and their surroundings and, perhaps most importantly, in the perceptions of that identity.

Thinking about identity led me back into my thoughts about extrapolation/creation, and how I might be able to apply this to my word and media to generate an artwork. I chose to focus on the still image of the media, and find a way to break that down and recreate something else. I thought an interesting way to do this would be through examining the pixels (the atoms/foundation/identity of the image), and seeing if I could use that data to make something else, or present something that would be viewed as something else.

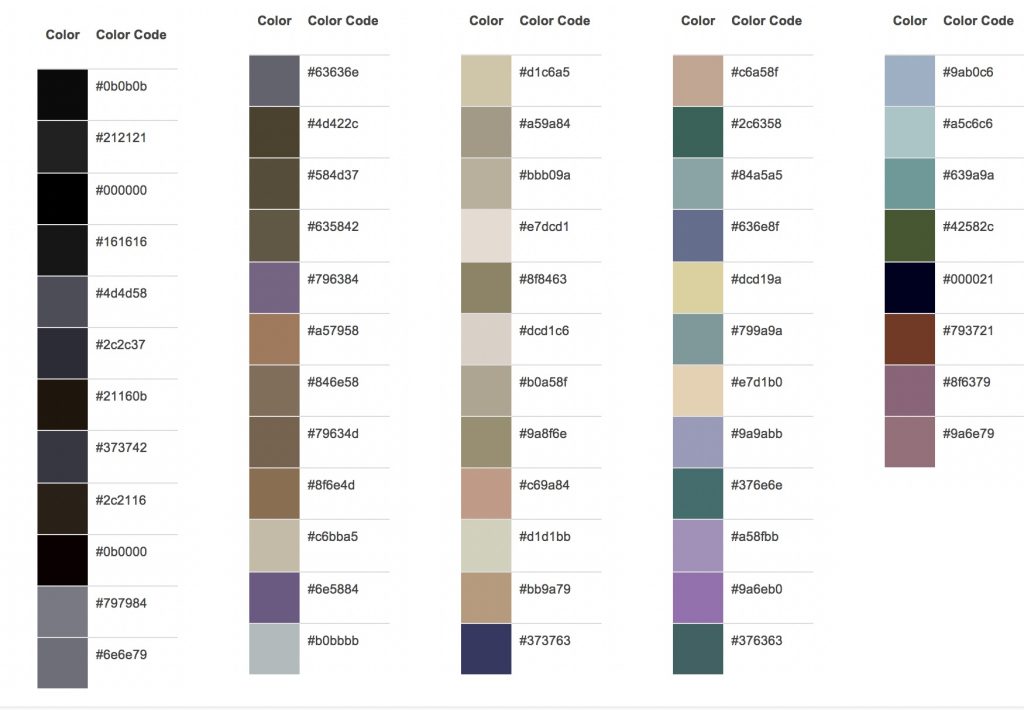

I wanted to create a new entity, so I used an online colour extractor (another serendipitous crossover here in terms of etymology and concept) to pull out the pixel colours present in the above image, which is the frame where Lieutenant General Corman utters the word MAN. The extractor asked for several numerical settings, so I decided to use the numbers that had repeatedly appeared in the earlier stages of this project: 533 and 11. These determined how many colours were extracted from the image. The colours appear as hex codes (six-digit codes, each representing a specific colour by its red, green and blue values, and commonly used in HTML coding).
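For anyone curious about what a colour extractor is doing underneath, here is a minimal Python sketch. It is an assumption on my part, not the online tool's actual method (which may well group similar colours together rather than counting exact matches), and the names `rgb_to_hex`, `most_common_colours` and the sample pixels are made up for illustration.

```python
from collections import Counter


def rgb_to_hex(rgb):
    """Format an (R, G, B) tuple of 0-255 integers as a '#rrggbb' hex code."""
    r, g, b = rgb
    return f"#{r:02x}{g:02x}{b:02x}"


def most_common_colours(pixels, how_many):
    """Return hex codes for the most frequent pixel colours, most frequent first."""
    counts = Counter(pixels)
    return [rgb_to_hex(rgb) for rgb, _ in counts.most_common(how_many)]


# Hypothetical stand-in for real image data: six RGB pixels.
pixels = [
    (18, 52, 86), (18, 52, 86), (255, 170, 0),
    (0, 0, 0), (255, 170, 0), (18, 52, 86),
]
palette = most_common_colours(pixels, 2)
print(palette)
```

Each pair of hex digits is one colour channel, so `(18, 52, 86)` becomes `#123456`: 18 is `12` in hexadecimal, 52 is `34`, and 86 is `56`.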

After discussions with Paul and the group, I decided to explore a process that was suggested to further underline the connection between my developing concept, my media and my word, MAN. Paul suggested changing the identity of the media/image further (i.e. beyond simply changing it from a 2D image to sound) by adding the word MAN into the code that made up the image.

A classmate from the group, Michael, who has (much) more of a background in video/image/media than I do, helped me find an online hex editor that would let me upload my image and convert it to code for me to change. I decided to follow the initial process of feeding an image into a colour extractor, but with an added step: I would first use the online hex editor to edit the code of the image (randomly inserting MAN into the code) before feeding the image to the colour extractor.

I again referenced the numbers that were so significant to the beginning of this assignment, adding MAN to the code 11 times, in random places. The edited code generated this image:
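The same kind of edit can be sketched in Python rather than done by hand in a hex editor. This is only an illustration under stated assumptions: that MAN goes in as its three text bytes, that the positions are picked at random, and that the function name `insert_word`, the seed and the stand-in data are all hypothetical. I did my version manually, so this is not a record of the exact positions I used.

```python
import random


def insert_word(data, word=b"MAN", times=11, seed=None):
    """Return a copy of data with word spliced in at random offsets.

    Offsets are chosen in the original data and applied from the highest
    down, so a later insertion never lands inside an earlier copy of word.
    """
    rng = random.Random(seed)
    offsets = sorted(
        (rng.randint(0, len(data)) for _ in range(times)), reverse=True
    )
    edited = bytearray(data)
    for offset in offsets:
        edited[offset:offset] = word  # splice in without overwriting anything
    return bytes(edited)


# Hypothetical stand-in for an image file: 64 zero bytes.
original = bytes(64)
edited = insert_word(original, seed=533)
print(len(original), len(edited))
```

With a real image file, bytes inserted near the start can land in the file header, which may leave the image unreadable rather than interestingly altered, so it can take a few attempts to get a result that still opens.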

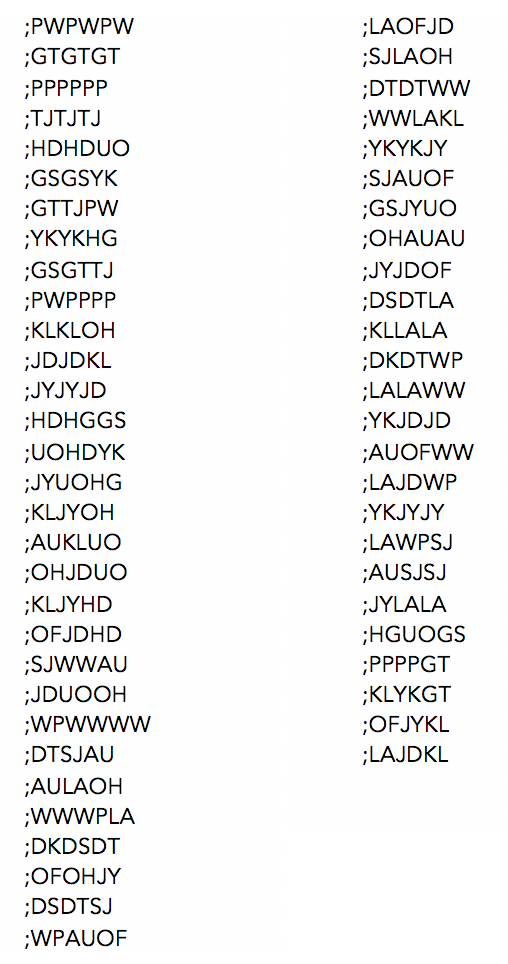

Using the online colour extractor on this image generated the below hex codes and their associated colours:

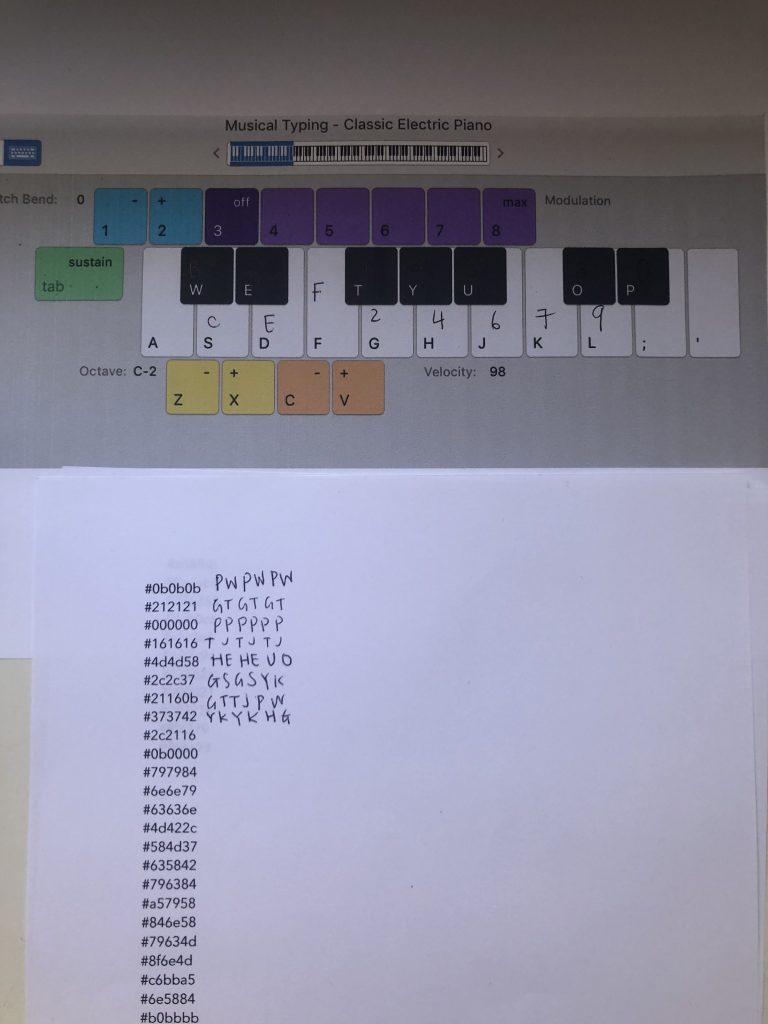

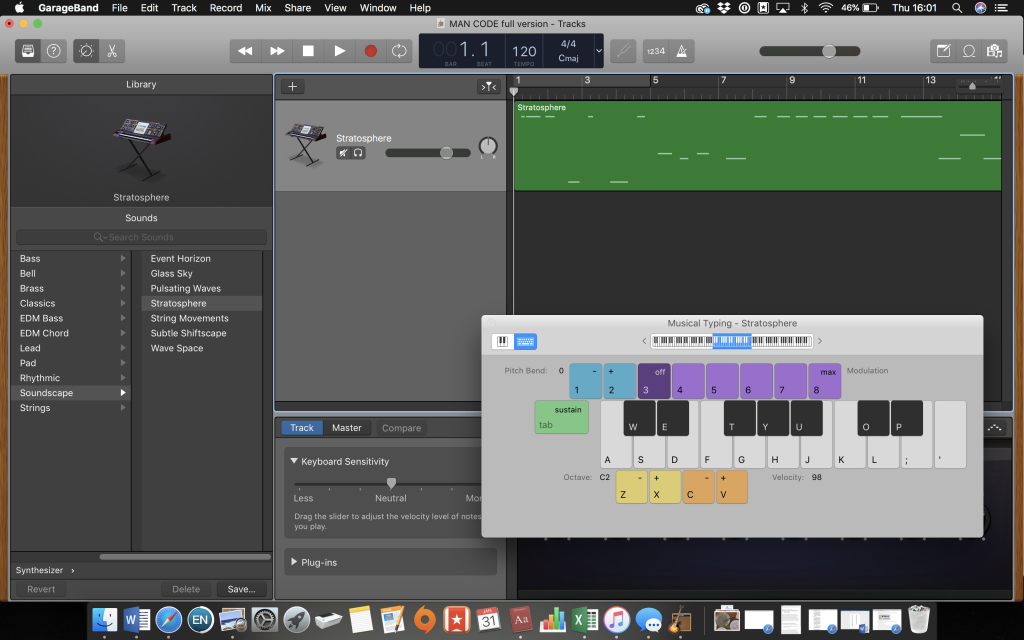

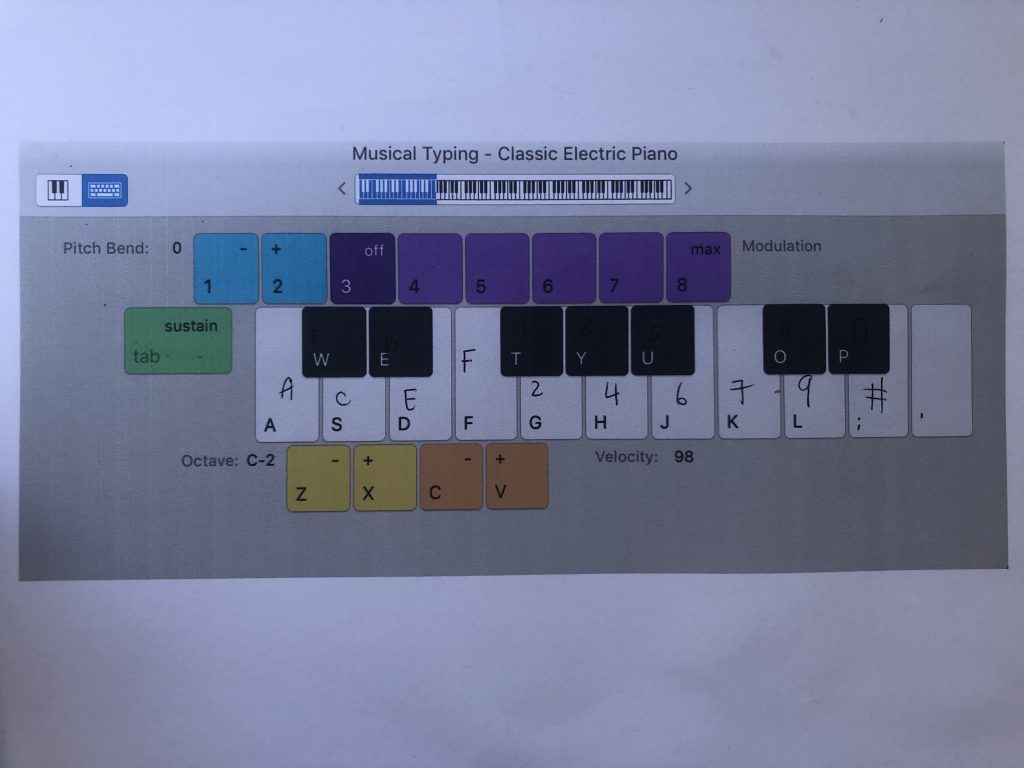

To make the new identity/perception, Man code stratosphere, I isolated the codes and used the keyboard option on GarageBand to assign each letter (a to f) and each number (0 to 9) a note. I left out the hashes for this first part of the experiment, to see if I could generate a more “musical” soundscape, and wanted to keep it as simple as possible, as I’d never used the program before (plus, I’m non-musical to the point of failing the recorder in Grade Three). I also limited the codes to a sample of the first eight, a choice made with the presentation duration in mind! See the audio file at the top of this page for this experiment sample.

I played around with different instruments on GarageBand before settling on the Stratosphere option in the Synthesiser Soundscape collection. As I mentioned, I’m profoundly not musical, but I wanted to distort the sound past a simple collection of piano notes, which weren’t very interesting (or particularly nice to listen to). I had an idea that I wanted the soundscape to blend and sound somewhat harmonious, but maintain the element of unsettling tunelessness that the original piano notes achieved.

For the second, longer, experiment, I used all of the hex code data, for a full conversion from image to soundscape (see the full version at the top of this page). For this version, I put the hex code data in Word, and then converted each character of code to its corresponding keyboard command (including the hash this time).
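The character-to-note step in both experiments can be sketched as a simple lookup. The note numbers here are an assumption for illustration only: I have used MIDI numbers starting at middle C (60) and given the hash the next note up, which is not necessarily the layout of the GarageBand keyboard I played. The names `NOTE_FOR` and `codes_to_notes` and the sample code `#1a2b3c` are likewise made up.

```python
HEX_CHARACTERS = "0123456789abcdef"
FIRST_NOTE = 60  # assumption: MIDI note 60 (middle C) for the character 0

# One note per hex character, climbing a semitone at a time (assumed layout).
NOTE_FOR = {ch: FIRST_NOTE + i for i, ch in enumerate(HEX_CHARACTERS)}
NOTE_FOR["#"] = FIRST_NOTE + len(HEX_CHARACTERS)  # assumption: hash is next up


def codes_to_notes(hex_codes, include_hash=False):
    """Turn a list of hex codes into one flat list of note numbers."""
    notes = []
    for code in hex_codes:
        for ch in code.lower():
            if ch == "#" and not include_hash:
                continue  # first experiment: hashes left out
            notes.append(NOTE_FOR[ch])
    return notes


sample = ["#1a2b3c"]  # hypothetical code, not one from my image
first_experiment = codes_to_notes(sample)
second_experiment = codes_to_notes(sample, include_hash=True)
print(first_experiment)
print(second_experiment)
```

Because every code starts with a hash, including it adds the same note at the start of each group of six, which gives the longer version a kind of recurring pulse.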

With both of these experiments, audiences are experiencing the image in a very different way to simply looking at it. I have taken the data that makes up the image and used it to create a new way of ‘viewing’ the image through sound. I feel that this process doesn’t so much change the identity of the image, given the fundamental data was identical, but rather the perception of the identity of the image, which is what I set out to achieve with this experiment.

If I were to extend this experiment into more resolved works, which I am quite interested in doing, I’d like to create lengthy tracks that incorporate as many hex codes as possible, for the audience to have the opportunity to ‘hear’ a complete image. I’d like to display the tracks in a space that references some elements of sensory deprivation: for example, in a wall-to-wall cushioned room with no light or visual input.